SpatialLLM: Advancing Urban Spatial Intelligence through Multimodal Large Language Models for Classification, Policy, and Reasoning

Keywords:

SpatialLLM, Multimodal Data, Urban Land Use Classification, Spatial Question Answering, Policy Recommendation, Satellite Imagery, Spatial CoordinatesAbstract

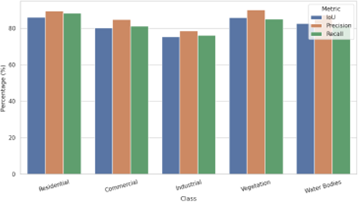

Urban environments are becoming increasingly complex, demanding advanced tools capable of synthesizing spatial, visual, and textual information to support intelligent planning, classification, and decision-making. This study presents SpatialLLM, a novel geospatially grounded large language model framework that integrates multimodal data—satellite imagery, spatial coordinates, and natural language texts—to address core urban computing tasks including land use classification, spatial question answering (QA), and policy recommendation generation. Using both public datasets and curated spatial corpora, we evaluated SpatialLLM on a suite of tasks. The model achieved a mean Intersection over Union (mIoU) of 82.4% for urban land use classification and outperformed baselines in QA with an exact match score of 83.2% and BLEU-4 of 0.81. Policy recommendations generated by the model received expert validation with an average rating of 4.31/5 across urban sustainability themes. An ablation study confirmed the critical role of cross-modal attention, where removing any modality significantly degraded performance. This research demonstrates that large language models, when spatially enriched and multimodally trained, can power next-generation urban spatial intelligence systems. The implications extend to urban planning, disaster response, and participatory governance, marking a shift toward more interpretable, adaptable, and data-driven urban policy pipelines.